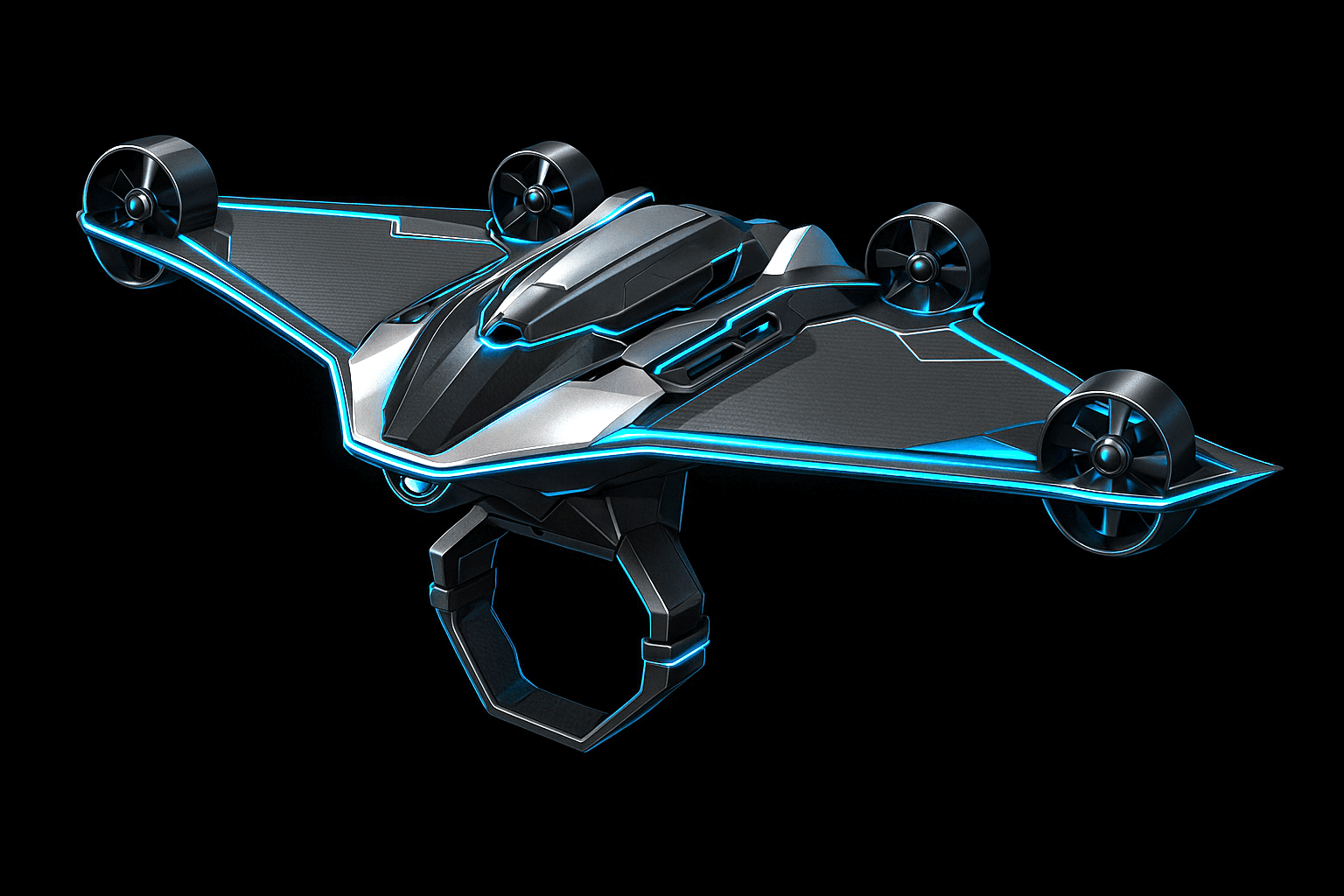

Onboard intelligence that turns defense robots into autonomous agents able to coordinate across land and air.

Collaborative autonomy that connects perception, language, and action in real time. Enyak AI runs at the tactical edge without reliance on cloud connectivity, enabling high-tempo operations in contested domains.

Each domain view renders live terrain and mission overlays inspired by the Enyak AI control stack. The visuals mirror the topographic language from the demo with dedicated telemetry for every platform.

Adaptive pathfinding highlights points of cover, ascent grades, and route confidence. Operators receive predictive traction bands and slope warnings in real time.

Airspace deconfliction visualizes wind shear corridors, uplink status, and altitude envelopes. Swarm links glow when agents share a common vector.

Tactical picture unifies threat vectors and runway briefs. Elevation gates and ingress corridors animate when Enyak shares a tasking update.

Vision-Language-Action models trained on simulation and human demonstration data connect perception to execution. Enyak AI fuses voice, vision, and mission context so teams of autonomous agents can reason together.

A first-of-its-kind AI model for multi-domain defense

Platform-agnostic autonomy that scales from single-vehicle operations to coordinated multi-domain teams.